A brief history of enterprise data challenges

November 16, 2020

Today, the data ecosystem is thriving, buzzwords are everywhere, and new product names pop up every day. This makes it hard for people entering the field to separate the signal from the noise.

In this article, I try to take a step back and explain what the current ecosystem is made of. Why do we have this multitude of products, and where does each part fit in the modern enterprise? I will simplify quite a bit: in reality, every company is different and has different needs.

Early 2000s: The rise of the internet and data volumes

With the rise of the internet, companies struggled with the growing number of data sources they had to handle. Company data got siloed in different relational databases. This prevented companies from quickly getting data analytics and actionable insights about customers, sales…

Data warehouses provided a solution by bringing together all the siloed relational databases into one single source of truth, used to provide a 360° view of customer data.

Then, many giant tech companies started collecting huge amounts of data and needed to come up with new ways of storing and processing it. It couldn’t be done by a single computer anymore. Hadoop was born in 2006.

Hadoop is a set of software tools that enables distributed processing of huge datasets across multiple computers.

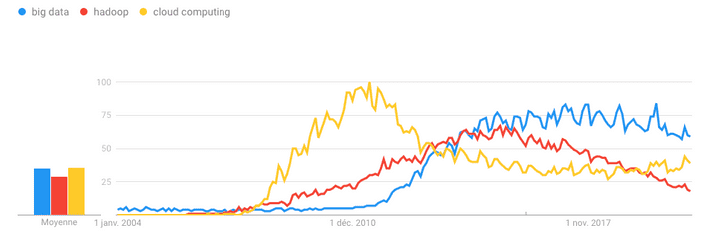

Then, many engineers left these giant companies to found their own big data startups and raised VC funding. By 2010, the Big Data hype was on.

In 2010, Gartner ranked Cloud Computing as the number one strategic technology. Cloud Computing provided the necessary infrastructure for Big Data: computing power, storage and networking.

Cloud Providers also provided startups with an easy way to start and scale their business (infrastructure as a service) without having to manage their own IT infrastructure.

2010 to 2015: Cloud transition and the shaping of modern data warehouses

Nevertheless, during the early 2010s, the majority of large companies were still hesitating between staying on-premise and moving to the cloud, and kept waiting for a major Big Data service provider that would make things easier.

Only the large companies that were early adopters invested in Big Data technologies. It turned out not to be a simple technology adoption: it required a company-wide data-driven culture, new job titles and a multitude of complex data processes.

During these years, the data warehouse pattern changed.

As data volume and variety increased and expectations for business analytics rose, companies started experiencing a bottleneck in the staging layer of the data warehouse. With the Extract-Transform-Load (ETL) pattern, it usually took weeks or months to integrate a new data source.

In addition to structured transactional data, companies started to collect more and more behavioral data with fast-changing schemas.

Finally, companies realized that storage had become far cheaper than before. The computing power of data warehouses had also gone up. Therefore, it made sense to load the data into the warehouse before transforming it.

Three shifts started happening:

- From ETL (Extract-Transform-Load) to ELT (Extract-Load-Transform)

- From on-premise to the cloud: cloud service providers could offer easy-to-use, elastic data warehouses that fit a company's needs.

- From Hadoop to new Data Lakes: Data Lakes are centralized staging areas for raw data. A data lake could be a simple Amazon S3 or Google Cloud Storage bucket, or it could have more capabilities.
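To make the ETL to ELT shift more concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a cloud data warehouse. The table names, the sample rows and the helper functions are all hypothetical and only serve the illustration. The point is the order of operations: the raw data is loaded untouched first, and the cleaning happens afterwards, in SQL, inside the warehouse.

```python
import sqlite3

# Hypothetical raw export from a source system: every field is still text.
RAW_ORDERS = [
    ("1", "alice", "30.0"),
    ("2", "bob", "8.0"),
    ("3", "alice", "12.5"),
    ("4", "carol", ""),  # missing amount, kept as-is in the raw table
]


def load_raw(conn, rows):
    """Extract + Load: copy the rows into the warehouse without cleaning them."""
    conn.execute(
        "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)


def transform(conn):
    """Transform: build a clean, typed table with SQL inside the warehouse."""
    conn.execute(
        """
        CREATE TABLE customer_revenue AS
        SELECT customer, SUM(CAST(amount AS REAL)) AS revenue
        FROM raw_orders
        WHERE amount <> ''
        GROUP BY customer
        """
    )


def run_elt(rows):
    """Run load then transform on an in-memory database and return the result."""
    conn = sqlite3.connect(":memory:")
    load_raw(conn, rows)
    transform(conn)
    result = conn.execute(
        "SELECT customer, revenue FROM customer_revenue ORDER BY customer"
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    print(run_elt(RAW_ORDERS))
```

Because the raw table is kept, a new transformation can be added later without going back to the source system, which is one reason the pattern shortens the time needed to integrate a new data source.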

Moreover, infrastructure able to handle huge amounts of data paved the way for the rise of IoT and connected objects, which met with a lot of success. This, in turn, increased the amount of data some companies had to handle.

2015 - 2017: Data Plumbing and AI Hype

The main Big Data infrastructure now seems clearer to most companies. The main challenges are how to ingest data easily and quickly, and how to make it available quickly to analysts and business users.

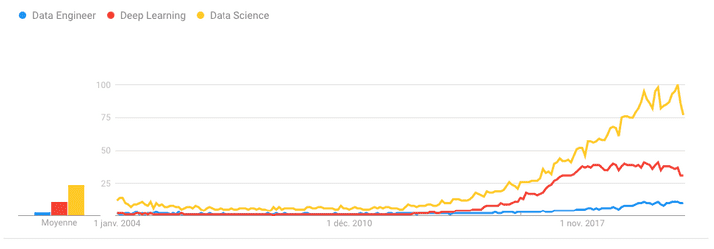

Data Engineers are hired to take care of the data plumbing around the modern data warehouse. We see the rise of data flow automation tools like Apache Airflow. These tools enable companies to automate the ingestion and the transformation part of a data pipeline.
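As an illustration of what such automation does at its core, here is a small sketch that runs pipeline steps in dependency order. It does not use the Apache Airflow API: it relies only on `graphlib` from the Python standard library (Python 3.9+), and the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter


def ingest(ctx):
    # Hypothetical ingestion step: pretend these values came from a source.
    ctx["raw"] = ["3", "5", "oops", "7"]


def transform(ctx):
    # Keep only the values that parse as non-negative integers.
    ctx["clean"] = [int(x) for x in ctx["raw"] if x.isdigit()]


def publish(ctx):
    # Make an aggregated figure available to analysts.
    ctx["report"] = sum(ctx["clean"])


TASKS = {"ingest": ingest, "transform": transform, "publish": publish}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "ingest": set(),
    "transform": {"ingest"},
    "publish": {"transform"},
}


def run_pipeline(tasks, dependencies):
    """Run every task after its dependencies; return the order and the context."""
    ctx = {}
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name](ctx)
        order.append(name)
    return order, ctx


if __name__ == "__main__":
    order, ctx = run_pipeline(TASKS, DEPENDENCIES)
    print(order, ctx["report"])
```

Real orchestration tools add scheduling, retries, monitoring and distributed execution on top of this basic idea of a dependency graph of tasks.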

NoSQL seems to lose some momentum, and SQL is officially back with tools such as Snowflake and BigQuery that allow SQL queries on both unstructured and structured data in the warehouse.

The “Big data” hype reaches a plateau, not because it’s not relevant anymore, but because it’s now a reality. A new field captures most of the media attention: artificial intelligence is the new gold. Following some deep learning advancements, machine learning gains a lot of traction.

This democratizes the operational use of AI to explore and offer data-powered products (recommendation systems, predictive maintenance…). As a result, and thanks to the stabilization of ML methodologies, we see the birth of collaborative DataOps platforms that enable companies to easily experiment with ML models on business data.

For large organizations: if you’re not actively building a Big Data + AI strategy at this point (either homegrown or by partnering with vendors), you’re exposing yourself to obsolescence. - Matt Turck

2018 - 2020: Current challenges

From 2018 to 2020, most large companies have started or are going through their cloud transition.

Engineers kept working on tools to access and process raw data more easily and quickly.

- Some tools, like dbt, made it possible to use SQL as the transform layer.

- Stream processing approaches are gaining traction.

Here are some of the challenges companies are facing today:

- Hybrid Cloud: In order to avoid vendor lock-in and optimize costs, many companies are opting for hybrid approaches with a mix of private cloud, public cloud and on-premise.

- Data cataloging and metadata management: Data and data sources are the foundation of the whole system. They need to be properly indexed, enriched and referenced to make it easier for anyone to find them and know how to use them. In the same vein, machine learning pipelines need “feature catalogs”.

- Data quality: Data quality means ensuring data integrity, consistency, availability and usability at every stage of the data pipeline.

- Security and privacy: Following the Facebook-Cambridge Analytica scandal, privacy became a more important issue for companies and for the general public. New regulations like the GDPR (General Data Protection Regulation) and the CCPA (California Consumer Privacy Act) introduced new data tracing and security constraints. Companies need easy solutions to centralize data access controls. The use of data must be traced and controlled.
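To give an idea of what the data quality challenge listed above looks like in practice, here is a small sketch of two common checks: required fields must be filled in, and a key must be unique. The `check_quality` function, the field names and the sample rows are hypothetical. Real pipelines typically run many more checks, at several stages.

```python
def check_quality(rows, required, unique_key):
    """Return a list of human-readable issues found in the rows."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"row {i}: missing {field}")
        # Consistency: the key must not appear twice.
        key = row.get(unique_key)
        if key is not None and key in seen:
            issues.append(f"row {i}: duplicate {unique_key}={key}")
        seen.add(key)
    return issues


# Hypothetical customer records with one empty email and one repeated id.
SAMPLE_ROWS = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "c@example.com"},
]

if __name__ == "__main__":
    for issue in check_quality(SAMPLE_ROWS, required=["id", "email"], unique_key="id"):
        print(issue)
```

Running such checks right after ingestion and again after each transformation helps catch a problem close to where it was introduced.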

Below, you’ll find a fairly complete reference architecture diagram of the modern data infrastructure. It was made by Matt Bornstein, Martin Casado, and Jennifer Li after interviewing more than 20 corporate data leaders and data experts. [Source]